From the Desk of

What are computers waiting for?

In 2003, on the eve of the then-largest tech IPO in history, Google was powered by about 15,000 computers worldwide. In aggregate, those machines had about 10 TFLOPS¹ of compute capacity.

Today, the MacBook Air puts 4.6 TFLOPS on your lap, plus ~10X faster networking and all-day battery life. Apple’s most powerful system-on-a-chip packs 28.4 TFLOPS into an 8-pound machine that draws much less power than a microwave oven.

We all take for granted that computers keep getting more powerful, as they have for decades. But take a moment to put your finger on the year your Mac started feeling between ~0.5X and 3X as powerful as Google was in 2003.

I mean, don’t think about it too hard; it’s a joke. (Send me an email if you want to be the first person I’ve heard of who believes their Mac feels more powerful than Google did at any point in history.)

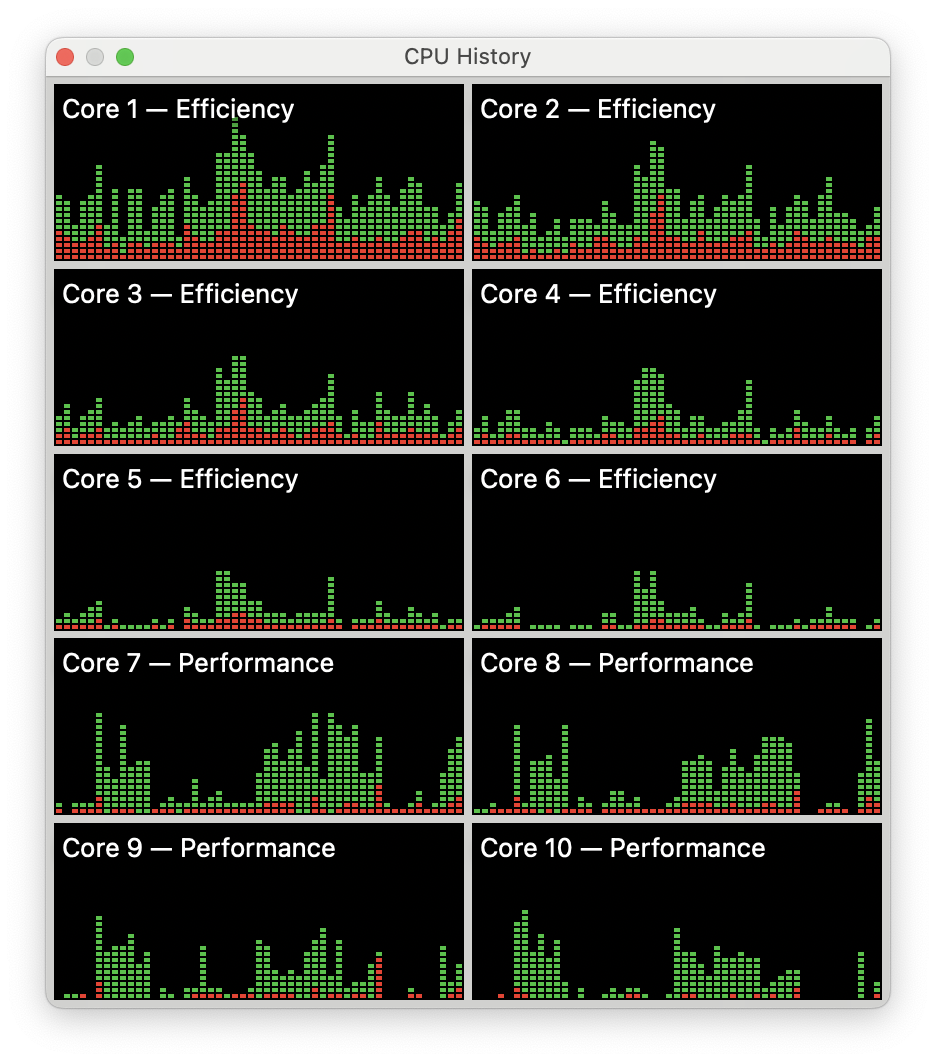

Your 2025 Mac might not feel as powerful as Google did 20 years ago, but on one metric it stands equal to Google then and Google now, to the original 1984 Macintosh, and even to the IBM mainframes that kicked off the information age: all of them sit waiting for instructions, as frustratingly patient as computers have ever been.

For all their wonderfully powerful potential, computers are stuck in the era of being mere tools. A bicycle for the mind remains merely a potent heap of metal without a skilled rider to hold the handlebars, push the pedals, and counterbalance the symphony of physical forces.

Don’t get me wrong, we’ve made oceans of progress: it’s magically easier to use Google to search ~all of human knowledge than it is to ride a bike. But searching is nonetheless a skill that must be acquired and developed, and its distribution is, to say the least, lumpy.

Your ability to benefit from the power of your computer, and through it the power of the web and beyond, depends entirely on your own generative ability: bringing your analytical skills and creative talents to bear to formulate queries and sift through search results, all to produce a richer understanding that is still destined to stay trapped inside your skull.

LLMs certainly improve on the backend experience of sifting through search results, mercifully skipping you past the cavalcade of vulgarity that has overtaken too much of the web. But wonderful as it is to consume information that’s been pre-chewed and distilled by an LLM, the output’s only as good as the prompt the machine still waits for you to provide.

They’re aesthetes without taste or any interest in beauty; they just want software optimized to use our computers as little as possible. Ironically, Apple itself, and the community of developers whose work makes Apple’s platforms valuable, are teeming with software engineers hell-bent for leather on making their software do very, very little.

It hasn’t always been this way. The original 1984 Macintosh was famously more ambitious than its hardware could reasonably support, and the software engineers on that team are still legends today for how expertly they economized the meager compute resources available to deliver a machine that felt years ahead of its time. Then again, when the iPhone was announced, legions of industry insiders doubted the demos were real until they got to touch the devices on launch day.

These seminal lightning strikes are followed by long stretches where progress is slow. In the beginning, it’s easy to attribute the slowdown to hardware still catching up to the promise of the new software paradigm. Eventually, and seemingly inevitably, developers get so used to hardware limits that they begin to limit their own imaginations. And the platform stagnates while the hardware catches up …then takes the lead again…

Which is where we find ourselves today: the folks leading the Mac and iPhone projects are operating as stewards of platforms whose job is to max out value for the platform’s operator, rather than to max out the power the hardware brings to bear for users.

Software that doesn’t max out the hardware it runs on is actively holding back the creatives, writers, and musicians (the very best and brightest among us) who make the art that lights up our brains. Apple’s platforms literally slow down the pace of human progress by banning developers from building increasingly powerful tools that could free creatives from the morass of manual computer tasks, so that they can do more of whatever it is they call “my life’s work.”

This timidity manifests clearly in Apple’s flagging hardware sales. When software doesn’t push hardware past its comfortable limits, users find few reasons to upgrade. Apple, in turn, resorts to increasingly hostile policies, leveraging control and artificial platform constraints to sustain revenue and please Wall Street.

When computers were getting started, everything in the world happened outside their screens. And that was true for decades, but eventually software started eating the world, and today’s world is a near perfect inversion of the dawn of computing. Nearly everything we do is entirely within, intermediated by, or observed by the digital environment.

When I was a computer science student, we were taught the golden rule: “delay work.” Compute resources were limited, so tasks were deferred until absolutely necessary, optimizing for scarcity rather than abundance; deterministic software could only go so far toward predicting the future anyhow. But over the last decade, the quiet revolution of machine learning has steadily transformed into an explosive era of predictive computation. Today, software could anticipate user actions, guess needs before they are stated explicitly, and preemptively take reversible actions. It’s time to rewrite the rules of what we allow our computers to do.

For users, computing has long felt like an infinite series of pop quizzes, in which getting the output you want depends on inputting the perfect incantation, whether typing precise commands into a terminal, jotting shorthand keywords into Google, or carefully crafting a prompt for ChatGPT. Each interaction depends on the user’s input expertise, so failing to get the desired output leaves you feeling like, oh well, I guess I’m just bad at computers.

As always, the future is already here; it’s just not evenly distributed yet. Social media’s algorithmic feeds point clearly toward a future in which computing proactively anticipates user desires rather than waiting passively for explicit instructions. These platforms have vastly more users than personal computers ever have had, and they elicit engagement so obsessive that it often invites serious analogies to addiction².

You’re free to be skeptical, cynical, or disdainful toward social media and/or algorithmic feeds. But I’m too simple to ignore the overwhelming evidence: billions of people, voting with their time and attention, clearly prefer passively personalized content. Harnessing the power of predictive algorithms and bringing them back to personal computing will revolutionize creative workflows, unlocking a Cambrian explosion for software.

By finally solving software’s intimacy problem, we get a chance to build computers that serve people rather than waiting indefinitely, politely and patiently, for their users to input the magic phrase that unlocks all that power idling away on our laps.